Over the past year I have spent a lot of time with customers and partners, in workshops and in board meetings, and the same question keeps coming up in different forms: Where does AI actually sit in our technology landscape? How much will it change what we already have in place? And where will AI create value for us now and into the future?

I understand the unease. For decades, enterprises have invested heavily in technology stacks engineered for predictable data flows, reliable integrations, and sharp, well-defined boundaries between systems. Technology was deterministic, systematic, and comfortably controllable. Those boundaries are now dissolving.

We are entering an era in which every layer of the stack is quietly, steadily absorbing intelligence. It does not arrive as a bolted-on feature or an afterthought, but as a native, inseparable part of how modern technology functions. The result is a new kind of infrastructure that can reason, adapt, and solve abstract problems, either on its own or in collaboration with people. What felt certain yesterday is becoming fluid today. This change is already underway, whether we’re ready or not.

What I am seeing is a new mindset emerging. Leaders are no longer asking whether they should use AI. They are asking how to reorganise their technology foundations to support it.

The stack is no longer static

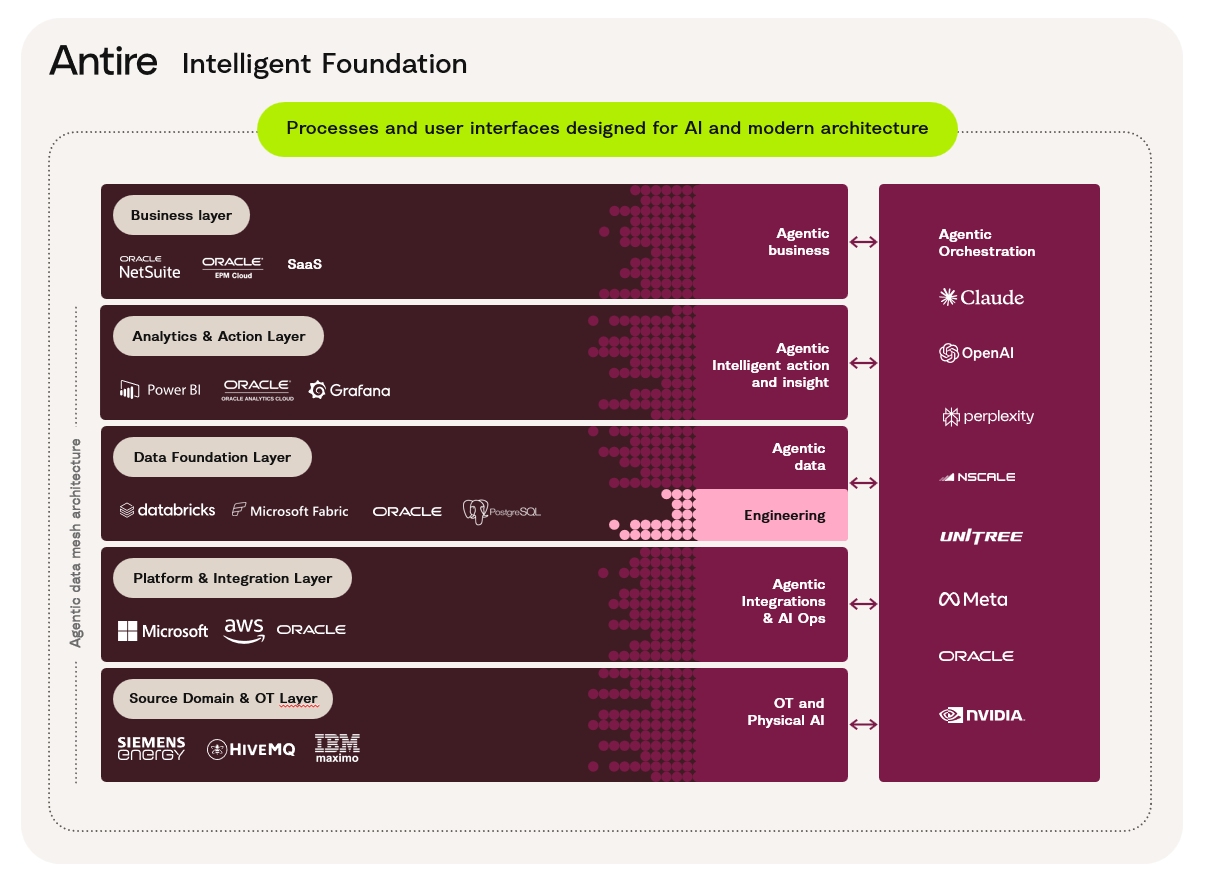

When I talk with CIOs or CTOs, I often sketch a simple picture. We used to think about technology in layers: operations at the bottom, cloud and integration above it, then data, analytics, and the business applications on top. Most companies still work with some version of this model.

But the reality has changed. Each of these layers now has its own AI evolution curve.

- Operational technology (OT) systems are becoming intelligent, with models running closer to the physical world.

- Integration platforms are adding vector search, embedding services and real-time inference.

- Data platforms that used to focus on structure and governance now also have to support embeddings, unstructured data, agent memory and model pipelines.

- Analytics is moving from dashboards to agents that decide and act.

- Business applications, once transaction-driven, are slowly becoming orchestration layers for intelligent processes.

In other words: every layer is becoming more agentic and intelligent, more context-aware, and more autonomous.

Illustration: Processes and user interfaces designed for AI and modern architecture.

Why this matters for leaders

There is useful data behind this transition. One recent industry survey found that 86% of enterprises believe they must modernise parts of their tech stack to deploy AI agents properly. Another study shows that most companies experimenting with AI struggle not because of models, but because their underlying architecture was not built for continuous, interconnected intelligence.

This matches what I see every week. Companies run successful pilots, but the moment they try to scale, the stack starts to resist. Latency issues appear. Costs begin to exceed the value delivered. Data pipelines cannot support the workload. Integrations break because they were never designed for bidirectional, real-time logic. Business systems cannot keep up with the demand for context-dependent actions.

It is not a model problem. It is an architecture balance problem.

The defining question for the journey ahead

A good starting point for leadership teams is not to ask “Where should we use AI?” but “How should our technology stack behave when AI becomes a built-in part of everything?”

This shift in thinking is important. It forces organisations to look at balance.

- How mature is each layer?

- Where does AI take the lead?

- Where must traditional components still play the primary role?

- Where is the risk of over-rotation?

- How do we account for the flat cost profile of AI?

- And where is the opportunity to let agents, data, robotics and systems collaborate in new ways?

The companies that progress fastest are the ones that view their stack as a living system rather than a fixed structure. They accept that AI will not arrive evenly. Some layers will mature earlier. Some will need to be protected longer. And that balance will keep evolving.

What I believe is coming next

Based on the conversations I am having with customers, and the work our teams are doing across industries, I believe three things will shape the next phase.

- Full vector ecosystems

Most organisations will need a foundation that supports embeddings, retrieval, context awareness and agent memory. Without this, AI stays stuck at the pilot stage.

- Agentic workflows across business systems

ERP, CRM and operational systems will increasingly rely on agents to surface insights, trigger actions and coordinate processes. Good data alone will not be enough.

- Architecture that adapts

Static integration patterns will not survive. Companies will need platforms and pipelines that are flexible, observable and governed, but also capable of supporting autonomous behaviour without losing control.

A responsibility to guide, not to hype

As a CEO, I feel a responsibility to help leaders bring clarity to something that can easily feel overwhelming. My goal is not to sell complexity, but to simplify what matters. I often say that AI does not replace your architecture, but it changes the meaning of it. It forces us to think about flow, context, trust and outcomes in a new way.

And it asks each company to define how much intelligence it wants embedded in each layer, and how much it is comfortable delegating to systems, models and agents, both now and as AI matures.

Bridging goals with trusted AI

No organisation should navigate this transformation alone. But the work cannot start with tools or trends. It has to start with understanding your stack, your people, your data and your ambition. When those are aligned, AI becomes an accelerator rather than a stress factor.

This is the conversation I look forward to having with more leaders in the months ahead. It is clear that the future architecture will be more intelligent, more connected and more dynamic than anything we have built before. The opportunity is enormous. The challenge is to approach it with clarity and trust.

And that is where I believe we can make a real difference.